In this article, I introduce a patent related to an instrument sound generation system based on a user’s performance posture, focusing on how bodily movement and posture are translated into a technically structured sound generation mechanism.

- Introduction: Connecting Body Movement and Musical Expression

- What the Patent Drawing Reveals About the Motion-Based Sound Interface

- How the Posture-Driven Sound Mechanism Works

- Benefits for Musicians, Students, and Creative Performers

- Engineering and Music-Interaction Considerations

- Patent Attorney’s Thoughts

- Application of the Technology: Posture-Encoded Sonic Expression and Embodied Signal Translation Systems

Introduction: Connecting Body Movement and Musical Expression

Musical instruments traditionally respond to finger movements or breath control. This patent drawing introduces a new dimension—a system that generates or modifies instrument sounds based on the performer’s body posture, turning motion and stance into musical input.

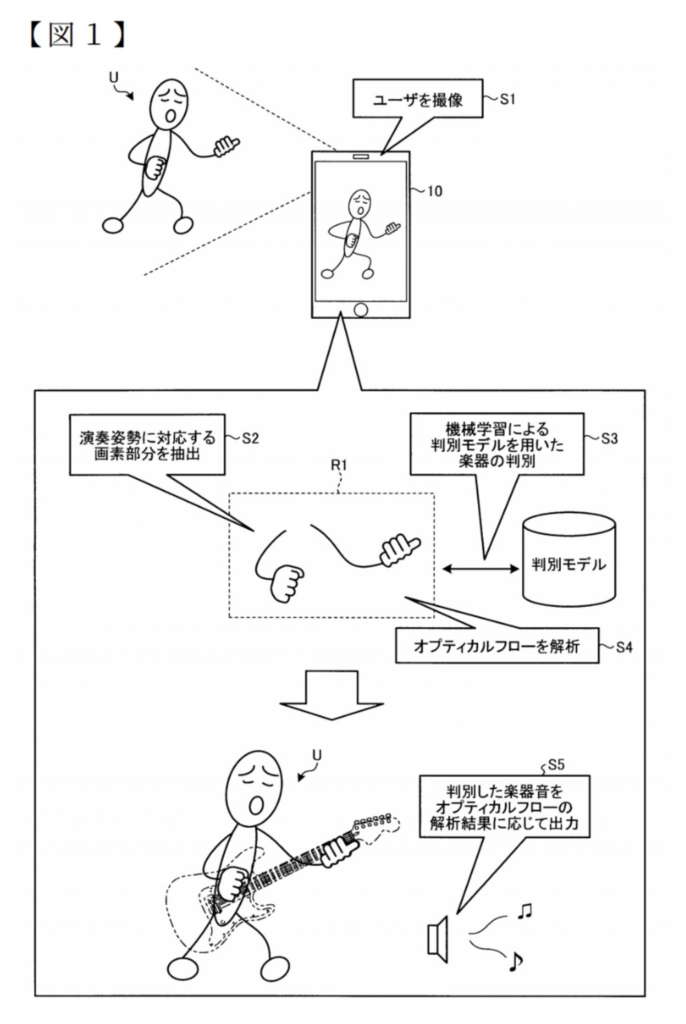

What the Patent Drawing Reveals About the Motion-Based Sound Interface

The illustration highlights a posture-detection system layered onto musical performance:

- Sensors placed on the performer’s arms, torso, or instrument

- A processing module that interprets posture angles

- A display or feedback panel showing posture quality

- Sound-generation logic that adjusts pitch, effects, or tone

- Suggested body position guidelines for optimal performance

The system captures the subtleties of human movement and translates them into sound.

How the Posture-Driven Sound Mechanism Works

The system blends physical form with sound production:

- User posture is detected through angle sensors or accelerometers

- The system maps posture values to sound parameters

- Good posture may boost tone quality or enable special effects

- Incorrect posture may trigger warnings or muted output

- Posture data can be recorded for practice evaluation

The performer’s entire body becomes part of the instrument.
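To make the mapping step more concrete, here is a minimal sketch of how posture values might be turned into sound parameters. The patent summary above does not give thresholds or parameter names, so everything here (`SoundParams`, `map_posture_to_sound`, the ±10° "good posture" range, the 90° arm range) is a hypothetical illustration rather than the claimed implementation.

```python
from dataclasses import dataclass


@dataclass
class SoundParams:
    brightness: float      # 0.0 (dark) to 1.0 (bright), e.g. a tone-filter setting
    effect_enabled: bool   # special effect unlocked by good posture
    gain: float            # 0.0 (muted) to 1.0 (full volume)
    warning: bool          # True when posture is outside the accepted range


# Assumed values for illustration only; a real system would calibrate these.
GOOD_TORSO_RANGE_DEG = (-10.0, 10.0)  # torso lean counted as "good" posture
MAX_ARM_ANGLE_DEG = 90.0              # arm elevation mapped to full brightness


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def map_posture_to_sound(torso_lean_deg: float, arm_angle_deg: float) -> SoundParams:
    """Map two posture angles to a set of sound parameters."""
    low, high = GOOD_TORSO_RANGE_DEG
    posture_ok = low <= torso_lean_deg <= high

    # Arm elevation scales linearly into tone brightness.
    brightness = clamp(arm_angle_deg / MAX_ARM_ANGLE_DEG, 0.0, 1.0)

    # Good posture enables the effect; incorrect posture mutes and warns.
    return SoundParams(
        brightness=brightness,
        effect_enabled=posture_ok,
        gain=1.0 if posture_ok else 0.0,
        warning=not posture_ok,
    )
```

Calling `map_posture_to_sound(0.0, 45.0)` gives a half-bright tone with the effect enabled, while a torso lean of 25° mutes the output and sets the warning flag. A gentler design could lower the gain gradually instead of muting outright.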

Benefits for Musicians, Students, and Creative Performers

- Helps beginners learn proper playing posture

- Encourages ergonomic movement, reducing injury risk

- Enables expressive performance through body motion

- Useful for digital instruments, VR music, and live shows

- Enhances practice with real-time feedback

It merges physical accuracy with creative expression.

Engineering and Music-Interaction Considerations

Key design points include:

- Stable sensor calibration to avoid false posture detection

- Low-latency sound processing, so the audible response keeps pace with the movement

- Clear posture visualization for learners

- Customizable mappings for different musical styles

- Comfort and safety of wearable sensors

Both biomechanics and musicality shape the system.
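One common way to reduce false posture detection is to combine a neutral-pose calibration with smoothing and hysteresis. The sketch below is my own illustration of that general technique, not something taken from the patent; the class name `PostureDetector`, the 15°/10° thresholds, and the smoothing factor are all assumed values.

```python
class PostureDetector:
    """Calibrated, smoothed posture check with hysteresis (illustrative only)."""

    def __init__(self, enter_bad_deg=15.0, exit_bad_deg=10.0, alpha=0.2):
        self.offset_deg = 0.0               # neutral-pose reading, set by calibrate()
        self.enter_bad_deg = enter_bad_deg  # deviation that flags bad posture
        self.exit_bad_deg = exit_bad_deg    # lower deviation that clears the flag
        self.alpha = alpha                  # smoothing factor, 0 < alpha <= 1
        self.smoothed_deg = None
        self.is_bad = False

    def calibrate(self, neutral_samples):
        """Store the average reading while the performer holds a neutral pose."""
        self.offset_deg = sum(neutral_samples) / len(neutral_samples)

    def update(self, raw_deg):
        """Feed one raw angle reading; return True while posture counts as bad."""
        angle = raw_deg - self.offset_deg
        if self.smoothed_deg is None:
            self.smoothed_deg = angle
        else:
            # Exponential moving average damps single-sample spikes.
            self.smoothed_deg += self.alpha * (angle - self.smoothed_deg)

        deviation = abs(self.smoothed_deg)
        if self.is_bad:
            # Two thresholds keep the flag from flickering near the boundary.
            if deviation < self.exit_bad_deg:
                self.is_bad = False
        elif deviation > self.enter_bad_deg:
            self.is_bad = True
        return self.is_bad
```

Note the trade-off with the latency requirement: a smaller `alpha` rejects more noise but makes the system slower to react, so the smoothing used for posture warnings may need to be separate from the path that drives the sound itself.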

Patent Attorney’s Thoughts

Music is shaped not only by touch, but by presence.

By linking posture to tone, this invention transforms performance into a full-body dialogue—letting musicians shape sound with how they stand, move, and breathe.

Application of the Technology: Posture-Encoded Sonic Expression and Embodied Signal Translation Systems

Original Key Points of the Invention

- The system generates different musical tones depending on the performer’s body posture.

- Sensors detect tilt, orientation, limb angles, or center-of-gravity shifts.

- Posture acts as a control channel layered on top of conventional musical input.

- Enables expressive performance where bodily configuration influences sound.
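As a rough illustration of the "control channel layered on top of conventional input" idea, the sketch below estimates tilt from a three-axis accelerometer reading and uses it to bend a note that was played in the usual way. The tilt formulas are the standard gravity-vector approximation, which only holds while the sensor is close to stationary. The axis sign convention, the 45° full-scale tilt, and the function names are my assumptions, not details from the patent.

```python
import math
from typing import Tuple


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Return (pitch_deg, roll_deg) from a near-static accelerometer reading in g.

    Assumes z points up when the sensor lies flat; other mountings need
    a different sign convention.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def layered_frequency(note_hz: float, pitch_deg: float,
                      max_bend_semitones: float = 1.0) -> float:
    """Bend a conventionally played note according to forward/backward tilt."""
    # Normalize tilt to [-1, 1], treating 45 degrees as full scale (assumed).
    ratio = max(-1.0, min(1.0, pitch_deg / 45.0))
    semitones = ratio * max_bend_semitones
    # Equal-temperament frequency ratio for a bend of `semitones`.
    return note_hz * 2.0 ** (semitones / 12.0)
```

With the sensor flat, `tilt_from_accel(0.0, 0.0, 1.0)` reports no tilt and the note passes through unchanged. The fingers or breath still choose the note; posture only modulates it, which is what keeps the posture channel an added layer rather than a replacement.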

Abstracted Concepts

- Translating body geometry into non-verbal output signals.

- Designing interfaces where posture becomes an information vector.

- Creating “embodied control layers” that augment traditional input methods.

- Using whole-body expression to shape non-physical outcomes (like sound).

Transposition Target

- A universal embodied-signal system where posture, micro-movements, and emotional stance modulate not only music but also interfaces for communication, creative tools, and environmental interaction.

Concrete Realization

A performer stands in a room with a posture-field interface.

Leaning forward brightens the room’s color tones; opening the chest expands sound textures; twisting the spine generates visual trails; grounding the feet slows environmental rhythms.

Wordless interactions become possible:

- Calm, vertical alignment creates soft atmospheric drones.

- Energetic posture sharpens the light and accelerates the tempo.

- Curved, gentle forms produce warm visual-sonic gradients.

The instrument evolves into a “posture-to-reality translator,” allowing humans to sculpt audiovisual environments through the geometry of their bodies, turning movement into music, space, and emotion.

↓Related drawing↓